To address the problems of the simple RNNs discussed earlier, researchers proposed a new approach to memory-related models: the Long Short-Term Memory (LSTM) model.

Long Short-Term Memory (LSTM) architecture

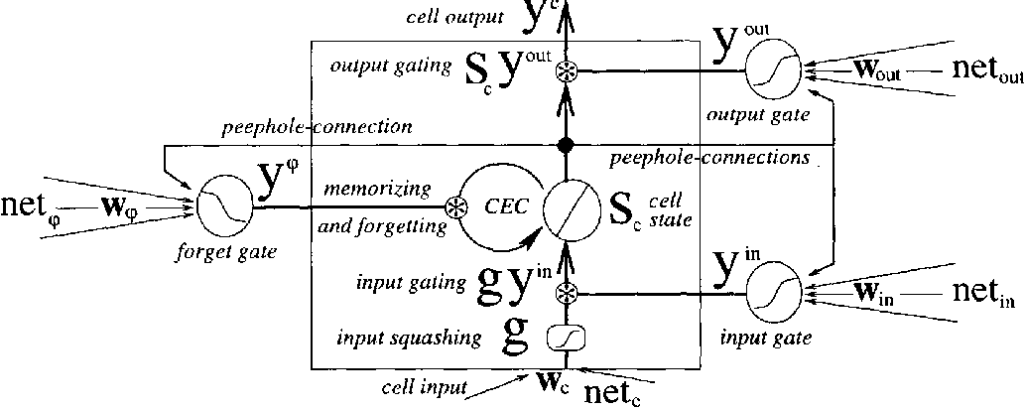

Although LSTMs are a variant of recurrent neural networks, they introduce some very important changes. The central idea is to keep a cell state that is separate from the current input values and is only modified through controlled operations.

In the diagram, the top horizontal line is the cell state, which acts as the long-term “memory” of the cell. The other key components of the architecture are the gates: each multiplication sign in the diagram marks a gate.
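For reference, the diagram is usually written as the update equations below. This is the common formulation with a forget gate; the symbols ($W$, $b$, $f_t$, $i_t$, $o_t$, $\tilde{C}_t$) are conventional names assumed here rather than labels taken from the figure. $\sigma$ is the sigmoid, $\odot$ is element-wise multiplication (the gates), and $[h_{t-1}, x_t]$ is the concatenation of the previous output and the current input.

$$
\begin{aligned}
f_t &= \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) && \text{forget gate} \\
i_t &= \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) && \text{input gate} \\
\tilde{C}_t &= \tanh\left(W_C\,[h_{t-1}, x_t] + b_C\right) && \text{candidate values} \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t && \text{new cell state} \\
o_t &= \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) && \text{output gate} \\
h_t &= o_t \odot \tanh(C_t) && \text{output}
\end{aligned}
$$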

Gates

Each gate is preceded by a sigmoid activation function. Because the sigmoid outputs values between 0 and 1, the multiplication that follows acts as a gate: a value near 0 blocks the information (it is forgotten), while a value near 1 lets it pass to the next stage. This way, the cell state is updated selectively, and the model keeps only what is important at that moment.
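A tiny sketch of the gating idea in plain Python: each value is multiplied by the sigmoid of a gate pre-activation. The function name `apply_gate` and the numbers are illustrative only; in a real LSTM the pre-activations are computed from the previous output and the current input.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid: maps any real number into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def apply_gate(values, gate_logits):
    """Element-wise gate (hypothetical helper): scale each value by sigmoid(logit).

    A logit far below 0 gives a factor near 0, so the value is blocked (forgotten);
    a logit far above 0 gives a factor near 1, so the value passes through.
    """
    return [v * sigmoid(g) for v, g in zip(values, gate_logits)]


cell_state = [2.0, -1.5, 0.7]
gate_logits = [-10.0, 0.0, 10.0]  # illustrative pre-activation values
print([round(x, 3) for x in apply_gate(cell_state, gate_logits)])  # [0.0, -0.75, 0.7]
```

The first value is almost entirely forgotten, the second is halved, and the third passes through nearly unchanged.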

The output

The output of the cell is the value h (the hidden state), which can be viewed as a filtered version of the cell state. The filtering happens at the last gate on the right: the cell state is first passed through a tanh function and then multiplied by the sigmoid output of that gate.
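Putting the pieces together, one time step of an LSTM cell can be sketched in plain Python. This is a minimal sketch assuming the common formulation with forget, input, and output gates and a tanh candidate layer, each acting on the concatenation of the previous output and the current input. The names `lstm_step`, `affine`, and `params` are hypothetical, and the weights are random placeholders rather than trained values.

```python
import math
import random
from typing import Callable, Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Vector, v: Vector) -> Vector:
    """Compute W @ v + b for a row-major matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
              params: Dict[str, Tuple[Matrix, Vector]]) -> Tuple[Vector, Vector]:
    """One LSTM time step; params maps a layer name to (W, b) acting on [h_prev, x]."""
    hx = h_prev + x  # list concatenation: [h_{t-1}, x_t]

    def layer(name: str, activation: Callable[[float], float]) -> Vector:
        W, b = params[name]
        return [activation(z) for z in affine(W, b, hx)]

    f = layer("forget", sigmoid)           # how much of the old cell state to keep
    i = layer("input", sigmoid)            # how much of the candidate to write
    c_tilde = layer("candidate", math.tanh)
    o = layer("output", sigmoid)           # how much of the cell state to expose
    # New cell state: forget part of the old memory, add the gated candidate.
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    # Output: cell state squashed by tanh, then filtered by the output gate.
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


# Usage: hidden size H, input size X, random placeholder weights.
random.seed(0)
H, X = 2, 3


def rand_params() -> Tuple[Matrix, Vector]:
    W = [[random.uniform(-0.5, 0.5) for _ in range(H + X)] for _ in range(H)]
    return W, [0.0] * H


params = {name: rand_params() for name in ("forget", "input", "candidate", "output")}
h, c = [0.0] * H, [0.0] * H
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h, c = lstm_step(x, h, c, params)
print(h, c)
```

Because the output gate lies in (0, 1) and tanh lies in (-1, 1), every component of h stays strictly between -1 and 1, while the cell state c itself is not squashed and can grow over time.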

Other variants

Peephole connections

Introduced by Gers & Schmidhuber (2000), the peephole approach provides the gates with the cell state itself as an input.
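The peephole idea can be sketched by adding a cell-state term to each gate's pre-activation. This assumes one common formulation with element-wise (per-unit) peephole weights, where the forget and input gates look at the previous cell state and the output gate looks at the updated one. The names `peephole_lstm_step` and `peep` are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Vector, v: Vector) -> Vector:
    """Compute W @ v + b for a row-major matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def peephole_lstm_step(x: Vector, h_prev: Vector, c_prev: Vector,
                       params: Dict[str, Tuple[Matrix, Vector]],
                       peep: Dict[str, Vector]) -> Tuple[Vector, Vector]:
    """params: layer name -> (W, b) on [h_prev, x]; peep: gate name -> per-unit weights."""
    hx = h_prev + x

    def pre(name: str) -> Vector:  # pre-activation without the peephole term
        W, b = params[name]
        return affine(W, b, hx)

    # Forget and input gates "peek" at the previous cell state.
    f = [sigmoid(z + p * c) for z, p, c in zip(pre("forget"), peep["forget"], c_prev)]
    i = [sigmoid(z + p * c) for z, p, c in zip(pre("input"), peep["input"], c_prev)]
    c_tilde = [math.tanh(z) for z in pre("candidate")]
    c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, c_tilde)]
    # The output gate peeks at the updated cell state.
    o = [sigmoid(z + p * c) for z, p, c in zip(pre("output"), peep["output"], c_new)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
    return h, c_new


def zeros(rows: int, cols: int) -> Matrix:
    return [[0.0] * cols for _ in range(rows)]


# Usage: one unit, one input, all ordinary weights zero, only a forget peephole.
params = {g: (zeros(1, 2), [0.0]) for g in ("forget", "input", "candidate", "output")}
peep = {"forget": [1.0], "input": [0.0], "output": [0.0]}
h, c = peephole_lstm_step([0.0], [0.0], [2.0], params, peep)
print(c)  # c[0] = 2 * sigmoid(1.0 * 2.0) ≈ 1.762
```

Even with all ordinary weights set to zero, the forget gate here opens wider because it sees the stored value directly, which is the behavior the peephole connection is meant to provide.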

Gated Recurrent Unit (GRU)

Introduced by Cho et al. (2014), the GRU merges the input and forget gates into a single gate, called the “update gate”, and also merges the cell state and the hidden state. The result is a simpler architecture with fewer parameters, which makes it computationally cheaper and often easier to train. This variant has become increasingly popular over the years.
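A minimal GRU step can be sketched in the same plain-Python style. This assumes the common formulation with an update gate z and a reset gate r; note that sources differ on whether z or 1 - z weights the previous state, and this sketch follows one of those conventions. The names `gru_step` and `params` are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def affine(W: Matrix, b: Vector, v: Vector) -> Vector:
    """Compute W @ v + b for a row-major matrix W."""
    return [sum(w * x for w, x in zip(row, v)) + bias for row, bias in zip(W, b)]


def gru_step(x: Vector, h_prev: Vector,
             params: Dict[str, Tuple[Matrix, Vector]]) -> Vector:
    """One GRU step; there is no separate cell state, only h."""
    hx = h_prev + x
    W_z, b_z = params["update"]
    W_r, b_r = params["reset"]
    W_h, b_h = params["candidate"]
    z = [sigmoid(v) for v in affine(W_z, b_z, hx)]  # update gate (replaces forget + input)
    r = [sigmoid(v) for v in affine(W_r, b_r, hx)]  # reset gate
    # The reset gate decides how much of the old state feeds the candidate.
    rh = [rk * hk for rk, hk in zip(r, h_prev)]
    h_tilde = [math.tanh(v) for v in affine(W_h, b_h, rh + x)]
    # A single gate both keeps old state (1 - z) and writes new content (z).
    return [(1.0 - zk) * hk + zk * ck for zk, hk, ck in zip(z, h_prev, h_tilde)]


def zero_params(H: int, X: int) -> Dict[str, Tuple[Matrix, Vector]]:
    return {g: ([[0.0] * (H + X) for _ in range(H)], [0.0] * H)
            for g in ("update", "reset", "candidate")}


# Usage: with all-zero weights, z = 0.5 and the candidate is tanh(0) = 0.
params = zero_params(2, 1)
h = gru_step([0.0], [1.0, -2.0], params)
print(h)  # [0.5, -1.0]
```

With hidden size H and input size X, this sketch uses three weight matrices of shape H×(H+X) plus biases, compared with four in the standard LSTM, which is where the lower computational cost comes from.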

Conclusion

The LSTM architecture considerably expanded the range of problems that recurrent models could solve. The original model and its variants have been used successfully in many tasks that depend on remembering earlier parts of a sequence.

The next big step, discussed in the next article, is the attention-based Transformer architecture introduced by researchers at Google. This approach once again reshaped the machine learning field and opened up many new possibilities.